Documentation of Wezen-Handeling

In this post, I document the work Wezen - Handeling, as it was performed and livestreamed on May 7, 2021.

This work is a sequel to Wezen - Gewording. The sound instruments that I use are similar to ones I developed for that piece. The code base and the processing algorithms have been redeveloped for this new piece.

In this work I draw inspiration from several artists whose practice I studied while writing the book Just a question of mapping:

- pose detection: inspired by Cathy van Eck and Roosna & Flak

- flick detection: inspired by Roosna & Flak

- recording / playback of control data: inspired by STEIM’s The Lick Machine

- button processing: inspired by Erfan Abdi’s approach for chording

- the use of some control data to set starting parameters and other data for continuous control: inspired by Jeff Carey

- a matrix of weights to connect input parameters to output parameters: inspired by Alberto de Campo’s Influx

Sound layers

In Wezen - Handeling there are two different layers of sound:

- sound instruments triggered directly from movements with the left hand and controlled with the right hand

- sound instruments controlled from recorded triggers and movement data. This recorded data can be looped, sped up and slowed down, the playback length and the starting point in the recorded buffer can be changed, and additional parameters can be modulated.

The controllers

The controllers that I use are open gloves with five buttons each (one for each finger) and a nine-degrees-of-freedom sensor. The data from these gloves is transmitted via a wireless connection to the computer, where it is translated to Open Sound Control data and received by SuperCollider. The core hardware for the gloves is the Sense/Stage MiniBee.

The gloves were originally designed and created for a different performance, Wezen - Gewording, where the openness of the glove was a necessity, since I also needed to be able to type to livecode. The nine-degrees-of-freedom sensor was added to this glove later: in Wezen - Gewording I only made use of the built-in 3-axis accelerometer of the Sense/Stage MiniBee.

The gyroscope of the right controller is not working, so effectively it is a six-degrees-of-freedom sensor. I discovered this defect during the development of the work, and it motivated my choice to use flicks only in the left hand and to use the right hand for continuous control.

Preprocessing of data

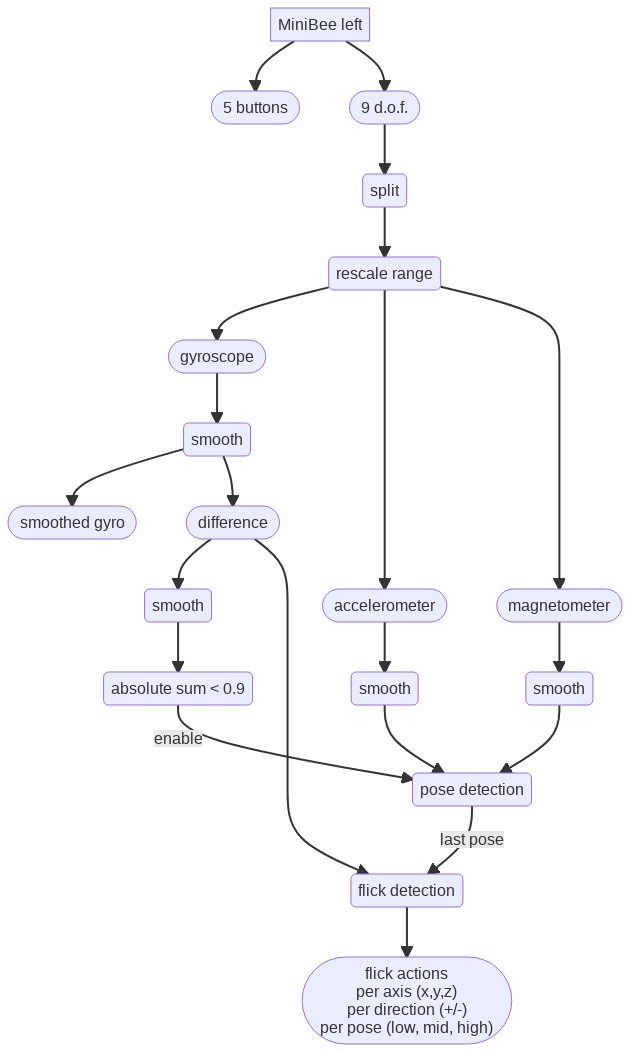

To preprocess the data from the gyroscope, accelerometer and magnetometer, I first split the data into these three streams (each having 3 axes) and do a range mapping with a bipolar spec. That is, I use a non-linear mapping from the integer input data range to a range between -1 and 1, in such a way that the middle point is at 0 and the resulting range feels linear with regard to the movements I make.
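As an illustration only, a bipolar range mapping of this kind could be sketched in Python as follows. The piece itself runs in SuperCollider; the function name `bipolar_map`, the 16-bit input range, the center value and the power curve are all assumptions made for this sketch, not the values used in the performance.

```python
def bipolar_map(raw, lo=0, center=32768, hi=65535, curve=1.5):
    """Map an integer sensor value to -1..1 so that `center` lands on 0.

    All default values are hypothetical; `curve` shapes the non-linearity
    (1.0 would give a plain linear mapping on each side of the center).
    """
    raw = min(max(raw, lo), hi)  # clip to the expected input range
    if raw >= center:
        norm = (raw - center) / (hi - center)  # 0..1 above the center
        return norm ** curve
    norm = (center - raw) / (center - lo)      # 0..1 below the center
    return -(norm ** curve)
```

Because the two halves are scaled separately, the middle point stays at 0 even when the center of the sensor range is not exactly halfway between its extremes.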

Then, for each of the three streams, I apply an exponential smoothing filter. In certain cases I use the difference output of this filter: the raw input value minus the smoothed filter output. This difference output is then processed with another exponential smoothing filter. In some cases this second filter uses a different parameter for when the signal is becoming larger than for when it is becoming smaller.
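The filter chain described here can be sketched in Python as below. This is a minimal sketch under stated assumptions: the class name `ExpSmooth`, the helper `process` and all coefficient values are hypothetical, and "becoming larger" is interpreted as the input being above the current filter value.

```python
class ExpSmooth:
    """One-pole exponential smoothing with optional separate up/down coefficients."""

    def __init__(self, alpha_up, alpha_down=None, initial=0.0):
        self.alpha_up = alpha_up
        # With no second coefficient given, the filter is symmetric.
        self.alpha_down = alpha_up if alpha_down is None else alpha_down
        self.value = initial

    def step(self, x):
        # Assumption: "rising" means the input is above the current filter value.
        alpha = self.alpha_up if x > self.value else self.alpha_down
        self.value += alpha * (x - self.value)
        return self.value


def process(samples, alpha=0.1, alpha_up=0.5, alpha_down=0.05):
    """Return (smoothed, difference, smoothed difference) for each input sample."""
    smooth = ExpSmooth(alpha)
    diff_smooth = ExpSmooth(alpha_up, alpha_down)
    out = []
    for x in samples:
        s = smooth.step(x)
        d = x - s  # difference output: raw input minus smoothed output
        out.append((s, d, diff_smooth.step(d)))
    return out
```

With a fast `alpha_up` and a slow `alpha_down`, the smoothed difference reacts quickly to the onset of a movement and decays slowly afterwards.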

Poses and flicks

To start and stop sounds, I am using a combination of flick detection and pose detection.

The pose selects the instrument that will be triggered. The pose detection algorithm makes use of prerecorded data of three different poses. The continuous smoothed data from the accelerometer and magnetometer is compared with the mean of the prerecorded data to determine whether the current value is within a factor of the measured variation of the prerecorded samples. If that is the case, the pose is matched.
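A pose matcher along these lines might look like the following Python sketch. It assumes that the "measured variation" is the per-axis standard deviation of the prerecorded samples; the function names, the `factor` default and the `floor` guard against zero variation are hypothetical.

```python
from statistics import mean, pstdev


def train_pose(samples):
    """samples: equal-length vectors recorded while holding one pose.

    Returns the per-axis means and per-axis standard deviations.
    """
    columns = list(zip(*samples))
    return [mean(c) for c in columns], [pstdev(c) for c in columns]


def matches_pose(current, pose, factor=2.0, floor=1e-3):
    """True if every axis lies within factor * deviation of the recorded mean."""
    means, devs = pose
    return all(
        abs(x - m) <= factor * max(d, floor)  # floor avoids a zero-width window
        for x, m, d in zip(current, means, devs)
    )


def detect_pose(current, poses, factor=2.0):
    """Return the name of the first matching pose, or None if nothing matches."""
    for name, pose in poses.items():
        if matches_pose(current, pose, factor):
            return name
    return None
```

A larger `factor` makes a pose easier to hit, at the cost of more overlap between neighbouring poses.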

The flick detection makes use of the difference of the gyroscope data with its smoothed version. If this difference crosses a certain threshold, an action is performed. Different thresholds can be set for different axes and different directions of the data. The detected pose is used as additional data to determine the action to trigger.

To prevent the movement of a flick from affecting the pose detection, the pose detection is only enabled when the summed smoothed difference signal of the gyroscope data is below a certain value.
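The flick detection and the gating of the pose detection can be illustrated with a short Python sketch. The function names, the threshold values and the choice to sum absolute values for the gate are assumptions made for this example.

```python
def detect_flick(diff, thresholds):
    """Detect a flick from the gyroscope difference signal.

    diff: per-axis (raw minus smoothed) gyroscope values.
    thresholds: per-axis (negative_threshold, positive_threshold) pairs,
    so each axis and each direction can have its own sensitivity.
    Returns (axis_index, direction) for the first crossing found, else None.
    """
    for axis, (value, (neg, pos)) in enumerate(zip(diff, thresholds)):
        if value > pos:
            return axis, +1
        if value < neg:
            return axis, -1
    return None


def pose_detection_enabled(smoothed_diff, limit=0.1):
    """Enable pose detection only while the hand is nearly still.

    Assumption: the summed signal is the sum of absolute per-axis values.
    """
    return sum(abs(v) for v in smoothed_diff) < limit
```

The returned axis and direction, together with the most recently detected pose, would then select the action to trigger.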

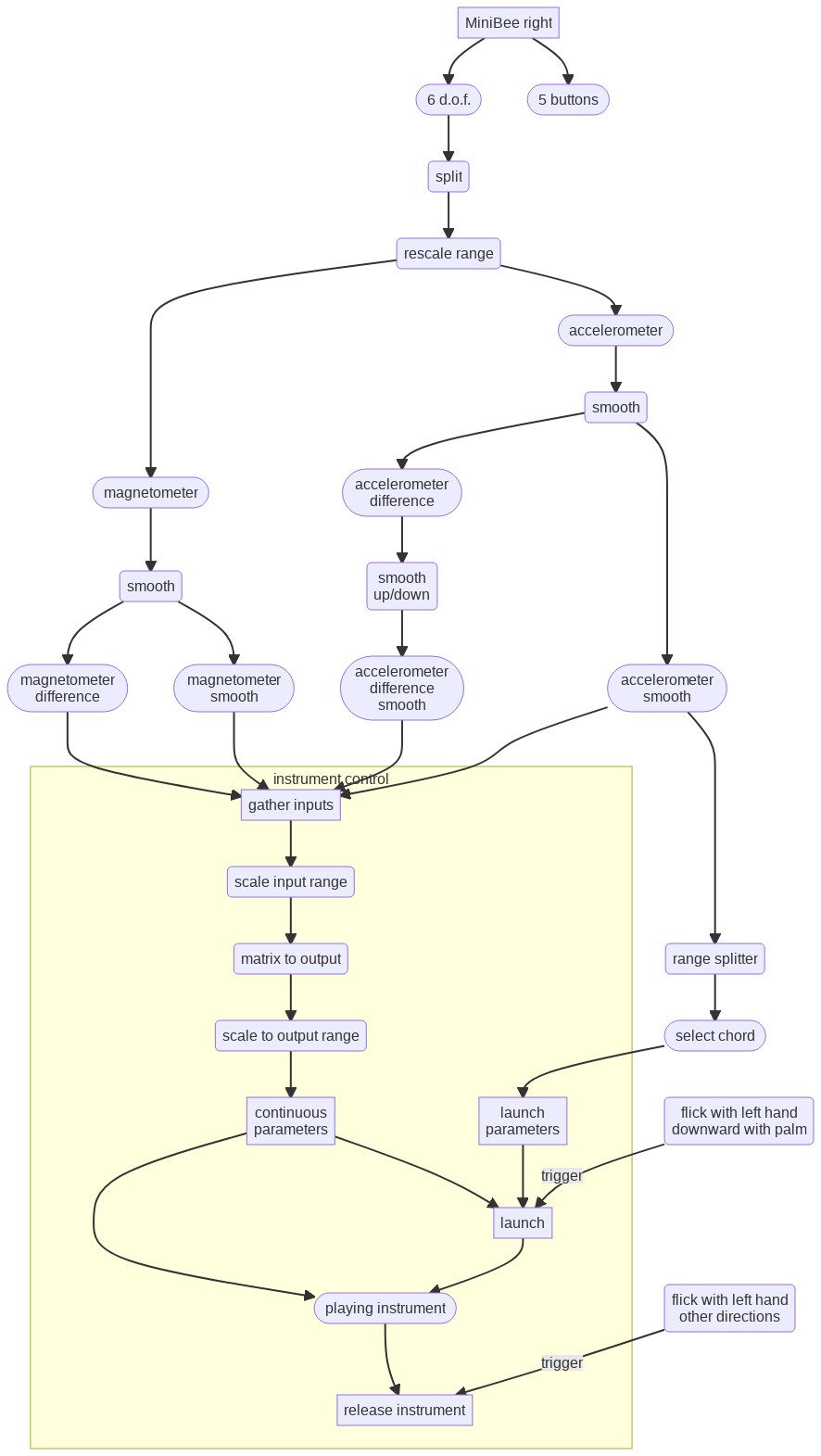

Instrument launching and control

Instruments are started, or launched, with flicks of the left hand when the palm of the hand is moved downwards (if you look at the arm held horizontally forwards with the back of the hand pointing up). Other flicks (upward, and those rolling the wrist to left or right) stop the instrument again.

The right hand data is used to control the different parameters of the sound:

- The position of the right hand when a sound is started selects a chord (a set of multiplication factors for the frequency of the sound) with which an instrument is started. This chord cannot be changed after launch.

- The movement of the right hand then controls different parameters continuously as long as the sound is playing.
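The chord selection at launch can be sketched as follows in Python. The chord table, the even division of the position range and the function names are hypothetical; the sketch only shows the idea of fixing a set of frequency multiplication factors at the moment a sound starts.

```python
# Hypothetical chords: each is a set of multiplication factors for the frequency.
CHORDS = [
    (1.0, 1.25, 1.5),
    (1.0, 1.2, 1.5),
    (1.0, 1.5, 2.0),
]


def select_chord(position, chords=CHORDS):
    """Pick a chord from a hand position in -1..1, dividing the range evenly."""
    position = min(max(position, -1.0), 1.0)
    index = min(int((position + 1.0) / 2.0 * len(chords)), len(chords) - 1)
    return chords[index]


def launch_frequencies(base_freq, position):
    """Frequencies fixed at launch; later hand movement does not change them."""
    return [base_freq * factor for factor in select_chord(position)]
```

For example, `launch_frequencies(200.0, -1.0)` uses the first chord in the table and yields 200, 250 and 300 Hz.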

The inputs for control are both the smoothed data from the accelerometer and the magnetometer and the difference between the raw and the smoothed data.

For each instrument there are differences in how exactly the different parameters affect the sound. Some concepts that prevail are:

- smoothed values are used for frequency, filter frequency, pulse width, and panning position.

- difference values are used for amplitude and filter resonance.

Lick recording and playback

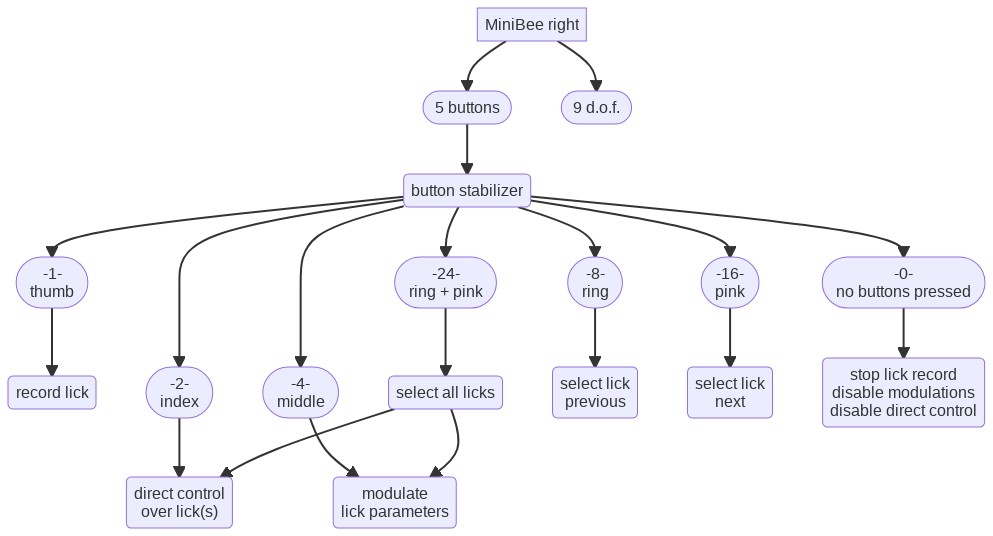

The buttons on both gloves are used to control the Lick Machine.

While the thumb button on the right hand glove is pressed, the data that is used for instrument control and the output data from the flick detector and the pose detector (at the moment of flicking) is recorded into a lick buffer. This happens as long as the button is pressed. At a button press, the buffer is first cleared and then recorded into. The data is always recorded into the current lick buffer.

In total there are 16 lick buffers. With the ring and little finger buttons of the right glove, the current lick buffer can be changed.
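The recording behaviour can be summarised in a small Python sketch. The class `LickRecorder` and its method names are hypothetical, and stepping to the next or previous buffer is only one assumed way in which the two buttons could change the current buffer.

```python
class LickRecorder:
    """Records timestamped control frames into one of several lick buffers."""

    def __init__(self, num_buffers=16):
        self.buffers = [[] for _ in range(num_buffers)]
        self.current = 0
        self.recording = False

    def button(self, pressed):
        """Thumb button: a new press clears the current buffer, then records."""
        if pressed and not self.recording:
            self.buffers[self.current].clear()
        self.recording = pressed

    def select(self, step):
        """Change the current buffer (assumed: step forwards or backwards)."""
        self.current = (self.current + step) % len(self.buffers)

    def feed(self, timestamp, frame):
        """Store incoming data only while the record button is held."""
        if self.recording:
            self.buffers[self.current].append((timestamp, frame))
```

Here a `frame` stands for whatever is recorded at one moment: the instrument control data plus any flick and pose output.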

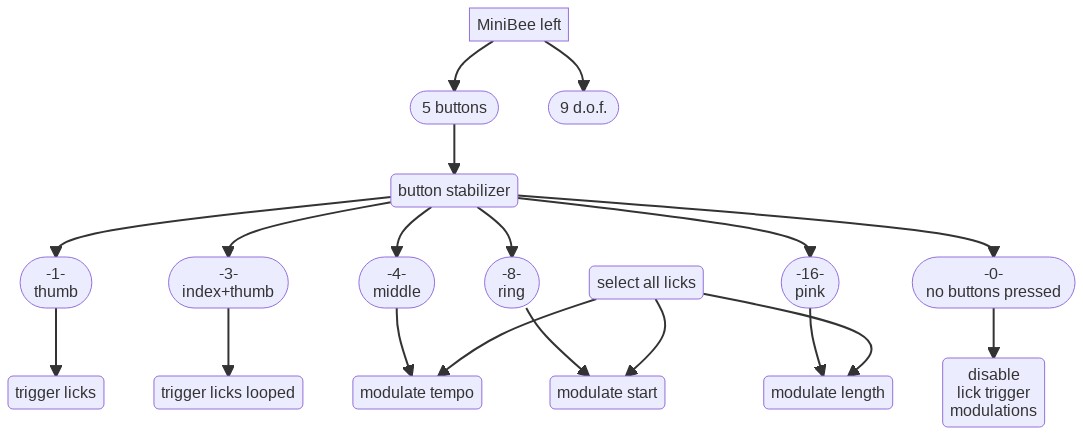

When the thumb button on the left hand glove is pressed, flicks with the left hand trigger playback of licks rather than instruments. The pose, flick axis and flick direction determine which lick will be played back. When the index button of the left glove is also pressed, the lick plays in looped mode; otherwise it plays once and stops.

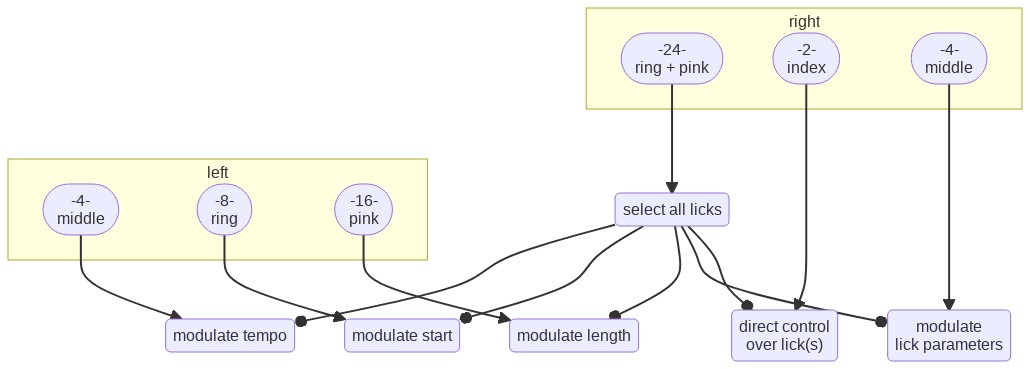

Lick modulation

The playback of the licks can then be modulated:

- the index finger of the right glove: enables replacing the recorded data with the current data from the right hand’s accelerometer and magnetometer.

- the middle finger of the right glove: enables controlling additional parameters of the sound with data from the right hand’s accelerometer and magnetometer.

- the middle finger of the left glove: enables changing the tempo of playback with an up and down movement of the right arm.

- the ring finger of the left glove: enables changing the start position in the lick buffer by rolling the right arm.

- the little finger of the left glove: enables changing the playback length of the lick buffer by rolling the right arm.

When these three left glove buttons are pressed simultaneously, all three parameters are modulated at the same time.

The modulation only takes effect on the currently selected lick. If both the ring and little finger buttons of the right glove are pressed, the modulation affects all licks simultaneously.
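How tempo, start position and playback length interact can be sketched in Python as below. The function `playback_times` and its parameters are hypothetical: `start` and `length` are expressed as fractions of the recorded duration, and `loops` stands in for looped mode.

```python
def playback_times(events, duration, tempo=1.0, start=0.0, length=1.0, loops=1):
    """Schedule recorded events for playback.

    events: time-sorted (time, data) pairs, times relative to the start of recording.
    duration: total recorded length in seconds.
    tempo: playback speed factor (2.0 plays twice as fast).
    start, length: window into the buffer, as fractions of `duration`.
    Returns (play_time, data) pairs relative to the start of playback.
    """
    if tempo <= 0:
        raise ValueError("tempo must be positive")
    t0 = start * duration
    span = length * duration
    # Keep only the events that fall inside the selected window.
    window = [(t, d) for t, d in events if t0 <= t < t0 + span]
    out = []
    for loop in range(loops):
        offset = loop * span / tempo  # each repetition lasts span / tempo seconds
        out.extend((offset + (t - t0) / tempo, d) for t, d in window)
    return out
```

Changing all three parameters at once shifts the window, resizes it and rescales time in a single pass over the recorded events.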

Flowchart images created with the Mermaid live editor